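Building Oracle RAC on CentOS 7.6

1. System environment configuration

1.1 Overview

This guide builds a two-node RAC cluster. Each node has two network interfaces, one public and one private. The host names of the two nodes are rac1 and rac2.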

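1.2 Parameter settings (RAC1 & RAC2)

- Edit the /etc/hosts file

    vim /etc/hosts

Comment out the following entries:

    #127.0.0.1 localhost rac1 localhost4 localhost4.localdomain4
    #::1 localhost rac1 localhost6 localhost6.localdomain6

Note: if these entries are left uncommented, the prerequisite check during the RAC installation reports error PRVG-13632.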

Append the following entries:

    #RAC-PUBLIC-IP
    192.168.100.101 rac1
    192.168.100.102 rac2
    #RAC-PRIVATE-IP
    10.10.10.101 rac1-priv
    10.10.10.102 rac2-priv
    #RAC-VIP
    192.168.100.201 rac1-vip
    192.168.100.202 rac2-vip
    #RAC-SCAN-IP
    192.168.100.200 rac-scan
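Before moving on, it can help to confirm that every name the installer will look up is present in the file on both nodes. The sketch below is one possible check. `missing_hosts` is a hypothetical helper name, not part of any Oracle tooling, and it only inspects the file passed to it rather than querying DNS.

```shell
# Hypothetical helper: print every NAME that has no active (uncommented)
# entry in the given hosts file.
# Usage: missing_hosts FILE NAME...
missing_hosts() {
    hosts_file="$1"
    shift
    for name in "$@"; do
        # Drop comments, then look for the name in any column after the IP.
        if ! sed 's/#.*//' "$hosts_file" |
            awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                              END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Example call (commented out so the snippet has no side effects when sourced):
# missing_hosts /etc/hosts rac1 rac2 rac1-priv rac2-priv rac1-vip rac2-vip rac-scan
```

An empty result means all seven names are defined; any name that is printed still needs an entry.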

1.3 Disable the firewall

- Stop the firewall service and disable it at boot

    systemctl stop firewalld.service
    systemctl disable firewalld.service

- SELinux configuration

Edit /etc/selinux/config and change SELINUX from enforcing to disabled:

    vi /etc/selinux/config

    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of three values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted

The change takes effect after the next reboot.
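Since the configured value and the running state can differ until the node is rebooted, a quick comparison can avoid surprises later. The following is a minimal sketch; `selinux_config_mode` is a hypothetical helper name introduced here for illustration.

```shell
# Hypothetical helper: print the SELINUX= value configured in a file.
# Commented lines and the SELINUXTYPE= line are ignored.
selinux_config_mode() {
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "$1"
}

# Example (commented out): compare the configured and the running state.
# selinux_config_mode /etc/selinux/config   # value that applies after a reboot
# getenforce                                # value in effect right now
```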

- Mount the system installation disc

- Configure a local yum repository

    mkdir /yums
    cd "/run/media/root/CentOS 7 x86_64/Packages"
    cp * /yums
    rpm -ivh deltarpm-3.6-3.el7.x86_64.rpm
    rpm -ivh python-deltarpm-3.6-3.el7.x86_64.rpm
    rpm -ivh createrepo-0.9.9-28.el7.noarch.rpm
    cd /yums
    createrepo .
    vi /etc/yum.repos.d/yum.local.repo

Contents of yum.local.repo:

    [local]
    name=yum local repo
    baseurl=file:///yums
    gpgcheck=0
    enabled=1

- Install the dependency packages

    yum install -y bc
    yum install -y compat-libcap1*
    yum install -y compat-libcap*
    yum install -y binutils
    yum install -y compat-libstdc++-33
    yum install -y elfutils-libelf
    yum install -y elfutils-libelf-devel
    yum install -y gcc
    yum install -y gcc-c++
    yum install -y glibc
    yum install -y glibc-common
    yum install -y glibc-devel
    yum install -y glibc-headers
    yum install -y ksh libaio
    yum install -y libaio-devel
    yum install -y libgcc
    yum install -y libstdc++
    yum install -y libstdc++-devel
    yum install -y make
    yum install -y sysstat
    yum install -y unixODBC
    yum install -y unixODBC-devel
    yum install -y binutils*
    yum install -y compat-libstdc*
    yum install -y elfutils-libelf*
    yum install -y gcc*
    yum install -y glibc*
    yum install -y ksh*
    yum install -y libaio*
    yum install -y libgcc*
    yum install -y libstdc*
    yum install -y make*
    yum install -y sysstat*
    yum install -y libXp*
    yum install -y glibc-kernheaders
    yum install -y net-tools-*
    yum install -y iscsi-initiator-utils
    yum install -y udev
    yum install -y xclock*

Manually upload and install the following dependency packages:

    rpm -ivh cvuqdisk-1.0.10-1.rpm
    rpm -ivh rlwrap-0.37-1.el6.x86_64.rpm
    yum install -y compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm
    yum install -y kmod-20-21.el7.x86_64.rpm
    yum install -y kmod-libs-20-21.el7.x86_64.rpm

- Disable transparent huge pages

    cp /etc/default/grub /etc/default/grub.bak
    vi /etc/default/grub

Append one line:

    GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet transparent_hugepage=never"

After the edit the file looks like this:

    GRUB_TIMEOUT=5
    GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
    GRUB_DEFAULT=saved
    GRUB_DISABLE_SUBMENU=true
    GRUB_TERMINAL_OUTPUT="console"
    GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet"
    GRUB_DISABLE_RECOVERY="true"
    GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet transparent_hugepage=never"

Because the appended line overrides the earlier GRUB_CMDLINE_LINUX assignment, crashkernel=auto is no longer passed to the kernel. The setting only takes effect once the GRUB configuration has been regenerated and the node rebooted, for example with grub2-mkconfig -o /boot/grub2/grub.cfg on a BIOS-booted system.
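After the reboot, the kernel reports the active transparent huge page mode in /sys/kernel/mm/transparent_hugepage/enabled, with the active value shown in square brackets. The sketch below extracts that value; `thp_mode` is a hypothetical helper name used only for this illustration.

```shell
# Hypothetical helper: extract the bracketed (active) mode from the sysfs
# string, e.g. "always madvise [never]" -> "never".
thp_mode() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)\].*/\1/p'
}

# Example (commented out): should print "never" once the change is active.
# thp_mode "$(cat /sys/kernel/mm/transparent_hugepage/enabled)"
```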

- Kernel parameter configuration

    vi /etc/sysctl.conf

Add the following settings:

    kernel.shmmax = 2126008812
    kernel.shmmni = 4096
    kernel.sem = 250 32000 100 128
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    fs.file-max = 6815744
    kernel.shmall = 393216
    net.ipv4.tcp_max_tw_buckets = 6000
    net.ipv4.ip_local_port_range = 9000 65500
    net.ipv4.tcp_tw_recycle = 0
    net.ipv4.tcp_tw_reuse = 1
    #net.core.somaxconn = 262144
    net.core.netdev_max_backlog = 262144
    net.ipv4.tcp_max_orphans = 262144
    net.ipv4.tcp_max_syn_backlog = 262144
    net.ipv4.tcp_synack_retries = 2
    net.ipv4.tcp_syn_retries = 1
    net.ipv4.tcp_fin_timeout = 1
    net.ipv4.tcp_keepalive_time = 30
    net.ipv4.tcp_keepalive_probes = 6
    net.ipv4.tcp_keepalive_intvl = 5
    net.ipv4.tcp_timestamps = 0
    fs.aio-max-nr = 1048576
    net.ipv4.conf.all.rp_filter = 2
    net.ipv4.conf.default.rp_filter = 2

Apply the kernel parameters:

    sysctl -p

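Notes:

kernel.shmmax:

Defines the maximum size of a single shared memory segment. It should be large enough to hold the entire SGA in one segment. On 32-bit Linux the maximum is 4 GB (4294967296 bytes) minus 1 byte; on 64-bit Linux the maximum is the physical memory size minus 1 byte.

The recommended value is more than half of physical memory. In general any value larger than SGA_MAX_SIZE is sufficient, and physical memory minus 1 byte can be used.

kernel.shmall:

The total number of shared memory pages that can be used. The Linux shared memory page size is 4 KB.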
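The arithmetic behind these two parameters can be sketched as follows. The helper name `calc_shm` is hypothetical, and the rule used for kernel.shmall (enough pages to cover kernel.shmmax, rounded up) is an assumption based on a common convention rather than something the values in this guide follow exactly.

```shell
# Hypothetical helper: derive candidate values from a memory size.
# Usage: calc_shm MEM_BYTES [PAGE_SIZE_BYTES]
calc_shm() {
    mem_bytes="$1"
    page_size="${2:-4096}"                     # 4 KB pages unless told otherwise
    shmmax=$(( mem_bytes - 1 ))                # physical memory minus 1 byte
    # Assumption: size shmall so that it covers shmmax, rounding up to whole pages.
    shmall=$(( (shmmax + page_size - 1) / page_size ))
    echo "kernel.shmmax = ${shmmax}"
    echo "kernel.shmall = ${shmall}"
}

# Example (commented out): use the memory and page size of the current host.
# calc_shm "$(( $(awk '/MemTotal/ {print $2}' /proc/meminfo) * 1024 ))" "$(getconf PAGE_SIZE)"
```

For a 4 GiB host with 4 KB pages this yields kernel.shmmax = 4294967295 and kernel.shmall = 1048576.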

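- Modify pam.d/login

    vim /etc/pam.d/login

Add the following line. Giving the module name without a path lets PAM load it from its default module directory; the 32-bit path /lib/security/pam_limits.so does not exist on 64-bit CentOS 7 and should not be used.

    session required pam_limits.so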

- System resource limits

    vim /etc/security/limits.conf

Add the following settings:

    oracle soft nofile 1024
    oracle hard nofile 65536
    oracle soft nproc 16384
    oracle hard nproc 16384
    oracle soft stack 10240
    oracle hard stack 32768
    oracle hard memlock 134217728
    oracle soft memlock 134217728
    grid soft nofile 1024
    grid hard nofile 65536
    grid soft nproc 16384
    grid hard nproc 16384
    grid soft stack 10240
    grid hard stack 32768
    grid hard memlock 134217728
    grid soft memlock 134217728

- Configure environment variables

    vim /etc/profile

Add the following block:

    if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
        if [ "$SHELL" = "/bin/ksh" ]; then
            ulimit -p 16384
            ulimit -n 65536
        else
            ulimit -u 16384 -n 65536
        fi
    fi

Apply the environment settings:

    source /etc/profile

- Add users and groups, create directories

    groupadd -g 601 oinstall
    groupadd -g 602 dba
    groupadd -g 603 oper
    groupadd -g 604 backupdba
    groupadd -g 605 dgdba
    groupadd -g 606 kmdba
    groupadd -g 607 asmdba
    groupadd -g 608 asmoper
    groupadd -g 609 asmadmin
    useradd -u 601 -g oinstall -G asmadmin,asmdba,dba,asmoper grid
    useradd -u 602 -g oinstall -G dba,backupdba,dgdba,kmdba,asmadmin,oper,asmdba oracle

Set the user passwords:

    passwd grid
    passwd oracle

Create the Oracle Inventory directory:

    mkdir -p /u01/app/oraInventory
    chown -R grid:oinstall /u01/app/oraInventory
    chmod -R 775 /u01/app/oraInventory

Create the GI home directory:

    mkdir -p /u01/app/grid
    mkdir -p /u01/app/19c/grid
    chown -R grid:oinstall /u01/app/grid
    chmod -R 775 /u01/app/grid
    chown -R grid:oinstall /u01/app/19c
    chmod -R 775 /u01/app/19c/

Create the Oracle base directory:

    mkdir -p /u01/app/oracle
    mkdir /u01/app/oracle/cfgtoollogs
    chown -R oracle:oinstall /u01/app/oracle
    chmod -R 775 /u01/app/oracle

Create the Oracle RDBMS home directory:

    mkdir -p /u01/app/oracle/product/19c/db_1
    chown -R oracle:oinstall /u01/app/oracle/product/19c/db_1
    chmod -R 775 /u01/app/oracle/product/19c/db_1

- Configure environment variables for the grid user

Switch to the grid user:

    su - grid

Edit the .bash_profile file:

    vim ~/.bash_profile

    PS1="[`whoami`@`hostname`:"'$PWD]$'
    export PS1
    umask 022
    export TMP=/tmp
    export TMPDIR=$TMP
    export ORACLE_SID=+ASM1
    export ORACLE_TERM=xterm
    export ORACLE_BASE=/u01/app/grid
    export ORACLE_HOME=/u01/app/19c/grid
    export NLS_LANG="AMERICAN_AMERICA.AL32UTF8"
    export NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"
    export TNS_ADMIN=$ORACLE_HOME/network/admin
    export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
    export PATH=.:$PATH:$HOME/bin:$ORACLE_HOME/bin
    export THREADS_FLAG=native
    if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
        if [ "$SHELL" = "/bin/ksh" ]; then
            ulimit -p 16384
            ulimit -n 65536
        else
            ulimit -u 16384 -n 65536
        fi
        umask 022
    fi
    alias sqlplus='rlwrap sqlplus'
    alias asmcmd='rlwrap asmcmd'

Note: the following parameter must be set differently on RAC1 and RAC2:

    ORACLE_SID=+ASM1 on rac1, ORACLE_SID=+ASM2 on rac2

Apply the environment settings:

    source ~/.bash_profile

- Configure environment variables for the oracle user

Switch user:

    su - oracle

Edit the .bash_profile file:

    vim ~/.bash_profile

    PS1="[`whoami`@`hostname`:"'$PWD]$'
    export PS1
    export TMP=/tmp
    export TMPDIR=$TMP
    export ORACLE_HOSTNAME=rac1
    export ORACLE_BASE=/u01/app/oracle
    export ORACLE_HOME=/u01/app/oracle/product/19c/db_1
    export ORACLE_SID=orcl1
    export NLS_LANG="AMERICAN_AMERICA.AL32UTF8"
    export NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"
    export TNS_ADMIN=$ORACLE_HOME/network/admin
    export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
    export PATH=.:$PATH:$HOME/bin:$ORACLE_BASE/product/19c/db_1/bin:$ORACLE_HOME/bin
    export THREADS_FLAG=native
    if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
        if [ "$SHELL" = "/bin/ksh" ]; then
            ulimit -p 16384
            ulimit -n 65536
        else
            ulimit -u 16384 -n 65536
        fi
        umask 022
    fi
    alias sqlplus='rlwrap sqlplus'
    alias rman='rlwrap rman'

Note: the following parameters must be set differently on RAC1 and RAC2:

    ORACLE_HOSTNAME=rac1 on rac1, rac2 on rac2
    ORACLE_SID=orcl1 on rac1, orcl2 on rac2

Apply the environment settings:

    source ~/.bash_profile
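The per-node values differ only in the numeric suffix taken from the host name, so one profile could in principle be shared by both nodes. The sketch below shows the idea; `sid_for_node` is a hypothetical helper, and it assumes the host names end in the node number, as rac1 and rac2 do here.

```shell
# Hypothetical helper: append the trailing digits of a node name to a prefix.
# Usage: sid_for_node PREFIX HOSTNAME
sid_for_node() {
    suffix=$(printf '%s\n' "$2" | sed -n 's/^.*[^0-9]\([0-9][0-9]*\)$/\1/p')
    [ -n "$suffix" ] || return 1    # host name does not end in a number
    printf '%s%s\n' "$1" "$suffix"
}

# Examples (commented out): what a shared .bash_profile could use.
# export ORACLE_SID=$(sid_for_node +ASM "$(hostname -s)")   # grid user
# export ORACLE_SID=$(sid_for_node orcl "$(hostname -s)")   # oracle user
```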

- Configure iSCSI to mount the external storage

Switch to the root user:

    su - root

Install the iSCSI client software:

    yum -y install iscsi-initiator-utils

Enable the service at boot:

    chkconfig iscsid on

Discover the target:

    iscsiadm -m discovery -t sendtargets -p 192.168.100.103

Log in to the target manually:

    iscsiadm -m node -T iqn.2022.04.com.rac:iscsi.disk -p 192.168.100.103 -l

Configure automatic login to the target at boot:

    iscsiadm -m node -T iqn.2022.04.com.rac:iscsi.disk --op update -n node.startup -v automatic

List the shared disks:

    cd /dev/disk/by-path
    ls -l /dev/disk/by-path/*iscsi* | awk '{FS=" "; print $9 " " $10 " " $11}'

    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-1 -> ../../sdb
    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-2 -> ../../sdc
    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-3 -> ../../sdd
    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-4 -> ../../sde
    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-5 -> ../../sdf
    /dev/disk/by-path/ip-192.168.100.103:3260-iscsi-iqn.2022.04.com.rac:iscsi.disk-lun-6 -> ../../sdg

Show the WWID of each disk:

    [root@rac1 by-path]# for i in $(ls /dev/sd*|grep -v [0-9]|grep -v sda)
    > do
    > echo -ne "$(fdisk -l ${i}|grep ^Disk|grep ${i}|awk '{print $1,$2,$3,$4}') WWID:"
    > /lib/udev/scsi_id -g -u -d "${i}"
    > done|sort -nk3 -k5|column -t
    Disk /dev/sdb: 21.5 GB, WWID:360000000000000000e00000000010001
    Disk /dev/sdc: 21.5 GB, WWID:360000000000000000e00000000010002
    Disk /dev/sdd: 21.5 GB, WWID:360000000000000000e00000000010003
    Disk /dev/sde: 21.5 GB, WWID:360000000000000000e00000000010004
    Disk /dev/sdf: 21.5 GB, WWID:360000000000000000e00000000010005
    Disk /dev/sdg: 21.5 GB, WWID:360000000000000000e00000000010006

Create the udev rules file that binds each WWID to a fixed device name and sets its ownership:

    [root@rac1 by-path]# vi /etc/udev/rules.d/99-oracle-asmdevices.rules

After opening the file, add the following content:

    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010001", SYMLINK+="asm_data", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010002", SYMLINK+="asm_bak", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010003", SYMLINK+="asm_fra", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010004", SYMLINK+="asm_ocr_1", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010005", SYMLINK+="asm_ocr_2", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", ENV{ID_SERIAL}=="360000000000000000e00000000010006", SYMLINK+="asm_ocr_3", OWNER="grid", GROUP="asmadmin", MODE="0660"

Apply the udev rules:

    udevadm control --reload-rules
    udevadm trigger
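The six rule lines follow one fixed pattern, so they can be generated from the WWID and the desired link name instead of being typed by hand. This is only a sketch of that idea; `asm_udev_rule` is a hypothetical helper, and the owner, group and mode are simply the values used in this guide.

```shell
# Hypothetical helper: print one udev rule for an ASM disk.
# $1 = WWID reported by scsi_id, $2 = symlink name to create under /dev
asm_udev_rule() {
    printf 'KERNEL=="sd*", ENV{ID_SERIAL}=="%s", SYMLINK+="%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}

# Example (commented out): build the first two rules of the file.
# asm_udev_rule 360000000000000000e00000000010001 asm_data
# asm_udev_rule 360000000000000000e00000000010002 asm_bak
```

Once the rules have been reloaded and triggered, listing /dev/asm_* with ls -l should show the links that were created.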

- avahi-daemon configuration

    yum install -y avahi*
    systemctl stop avahi-daemon.socket
    systemctl stop avahi-daemon.service
    pkill -9 -f avahi-daemon
    systemctl disable avahi-daemon.socket
    systemctl disable avahi-daemon.service
    echo "NOZEROCONF=yes" >> /etc/sysconfig/network

- Time synchronization

Disable chronyd:

    yum install -y chrony
    timedatectl set-timezone Asia/Shanghai
    systemctl stop chronyd.service
    systemctl disable chronyd.service

Configure a scheduled time synchronization job:

    yum install -y ntpdate
    ## 192.168.100.150 is the IP of the time server; the system time is synchronized every day at 12:00
    vim /var/spool/cron/root

    00 12 * * * /usr/sbin/ntpdate -u 192.168.100.150 && /usr/sbin/hwclock -w

    ## Run the synchronization once by hand
    /usr/sbin/ntpdate -u 192.168.100.150 && /usr/sbin/hwclock -w

2. RAC installation (RAC1)

2.1 Node trust

- Set up SSH trust between the nodes

Upload the sshUserSetup.sh script to /root.

Note: this script is provided by Oracle. Extract the Oracle database archive or the Grid archive, locate the script, and upload it to rac1.

    sh sshUserSetup.sh -user root -hosts "rac1 rac2" -advanced -noPromptPassphrase
    sh sshUserSetup.sh -user grid -hosts "rac1 rac2" -advanced -noPromptPassphrase
    sh sshUserSetup.sh -user oracle -hosts "rac1 rac2" -advanced -noPromptPassphrase

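2.2 Grid installation

- Upload the Grid archive

Upload LINUX.X64_193000_grid_home.zip to /u01/app/19c/grid.

    cd /u01/app/19c/grid
    chown -R grid:oinstall LINUX.X64_193000_grid_home.zip

- Switch to the grid user and unzip

    su - grid
    cd $ORACLE_HOME
    unzip LINUX.X64_193000_grid_home.zip

Wait for the extraction to finish.

- Set the DISPLAY variable and start the installer

    export DISPLAY=192.168.100.103:0.0
    ./gridSetup.sh

Note: use the IP address of your own host machine, that is, the machine that displays the installer window.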

-

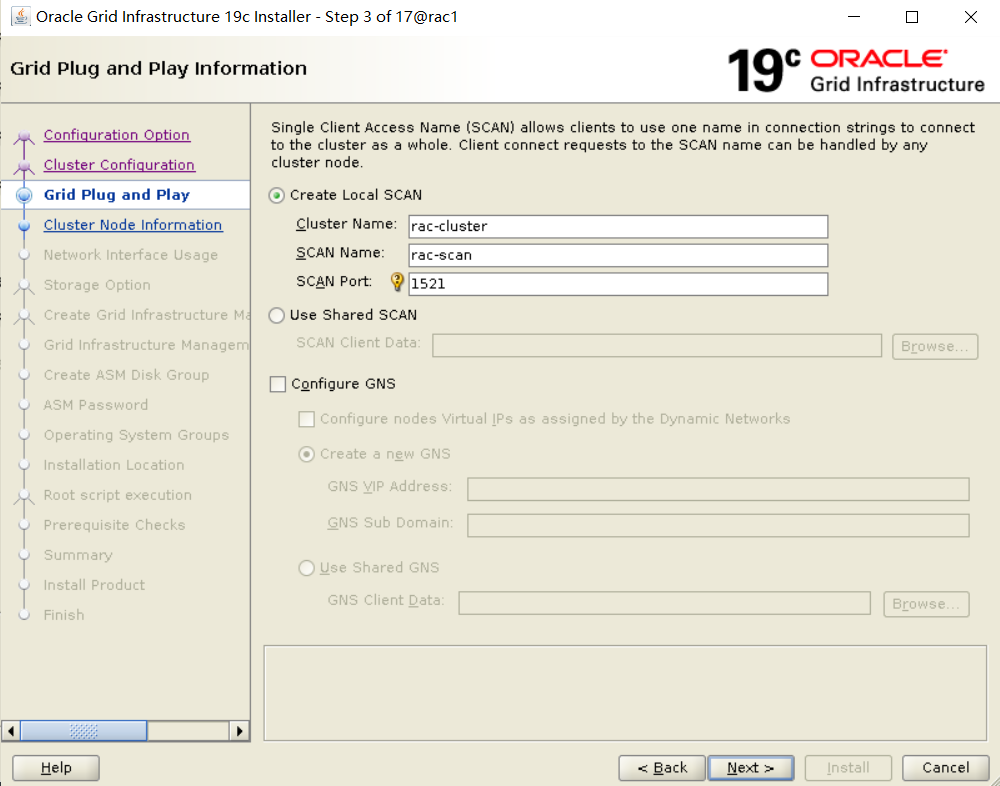

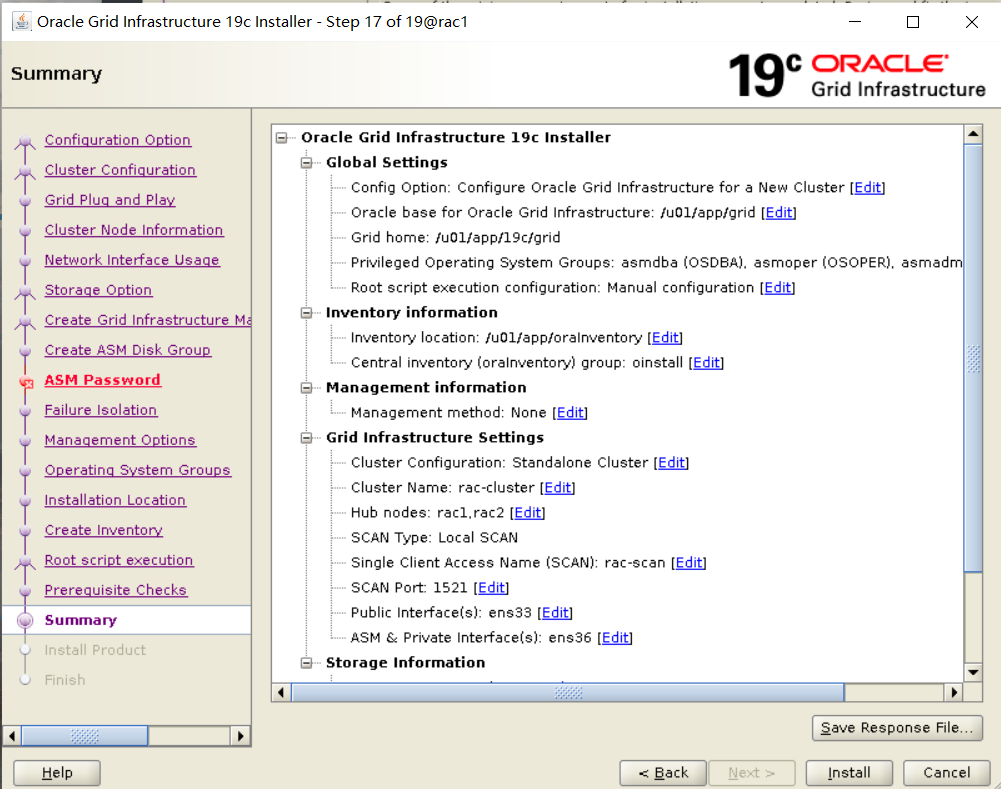

安装过程

这里的SCAN NAME一定要和你/etc/hosts文件里的那个RAC-SCAN-IP的名称保持一致而不是和IP保持一致。

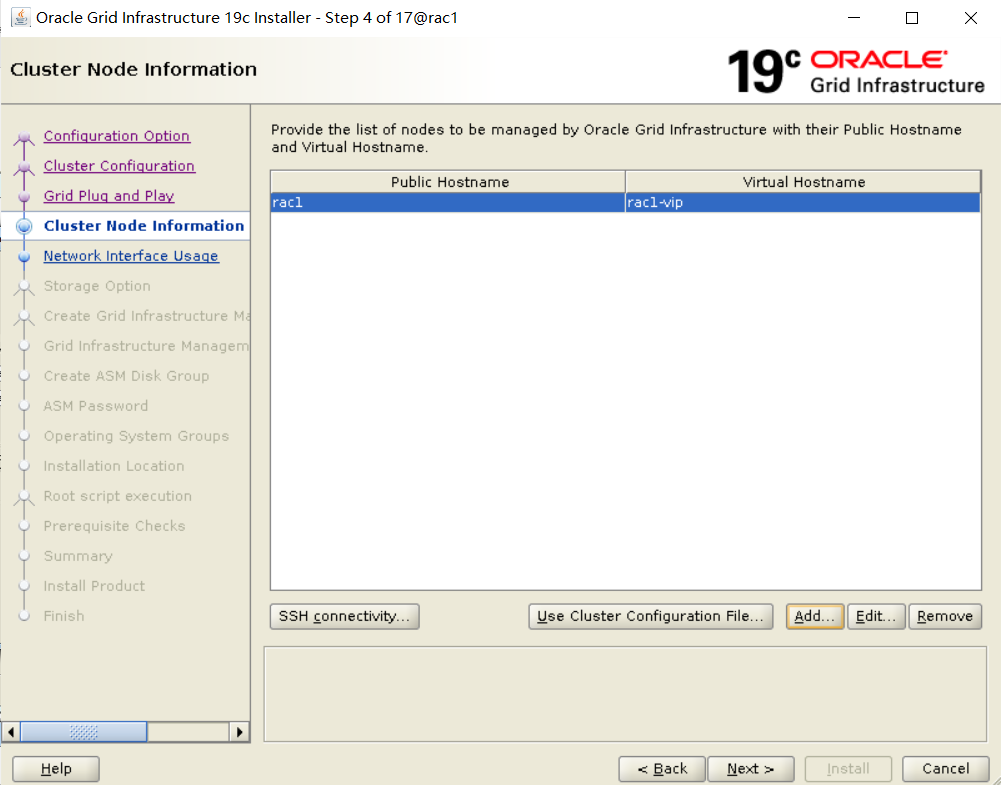

Click Add.

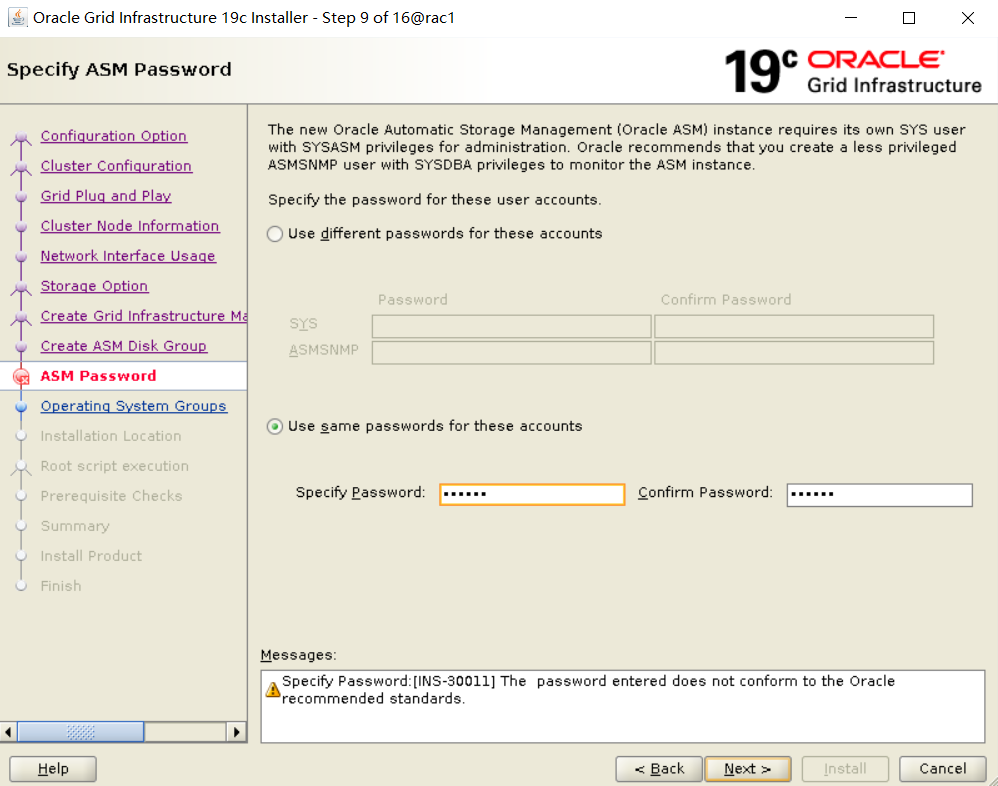

If the password is too simple, the installer shows a warning. Click Yes to continue.

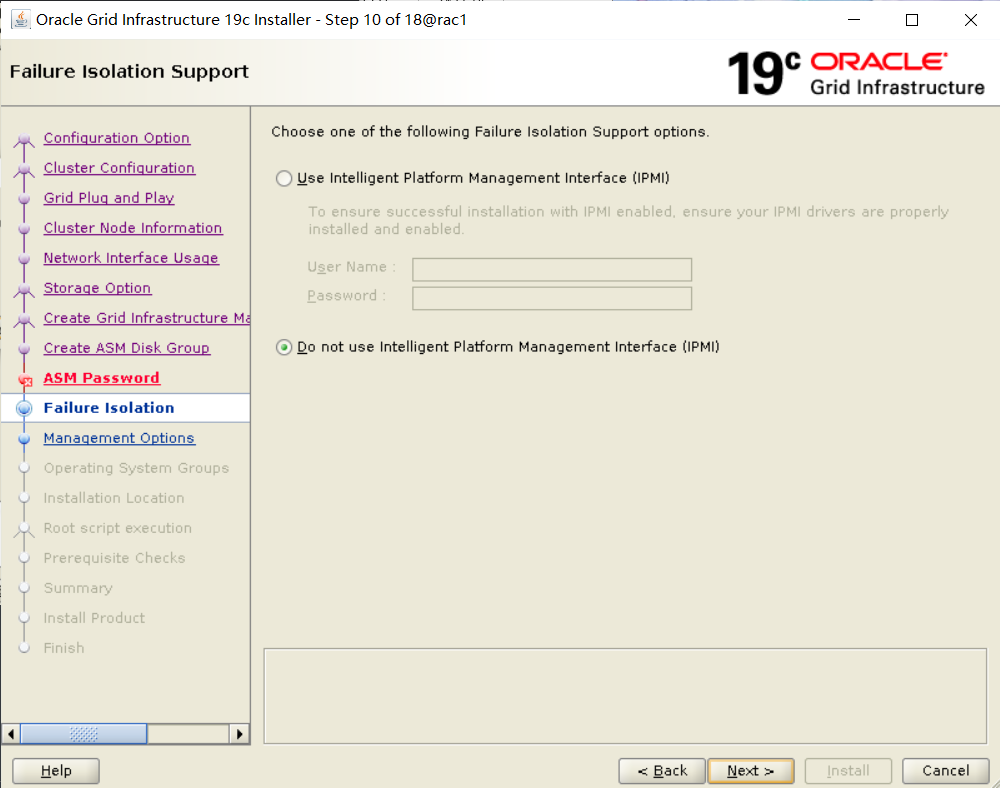

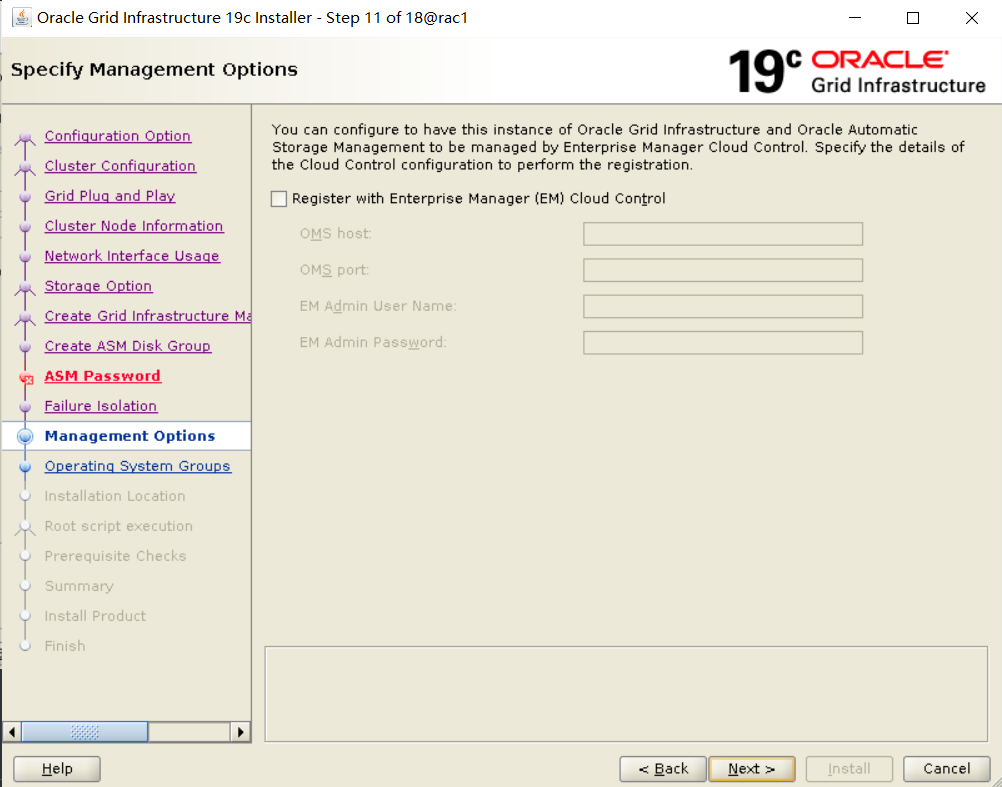

Whether to register with EMCC: it is not used here, so leave it unconfigured.

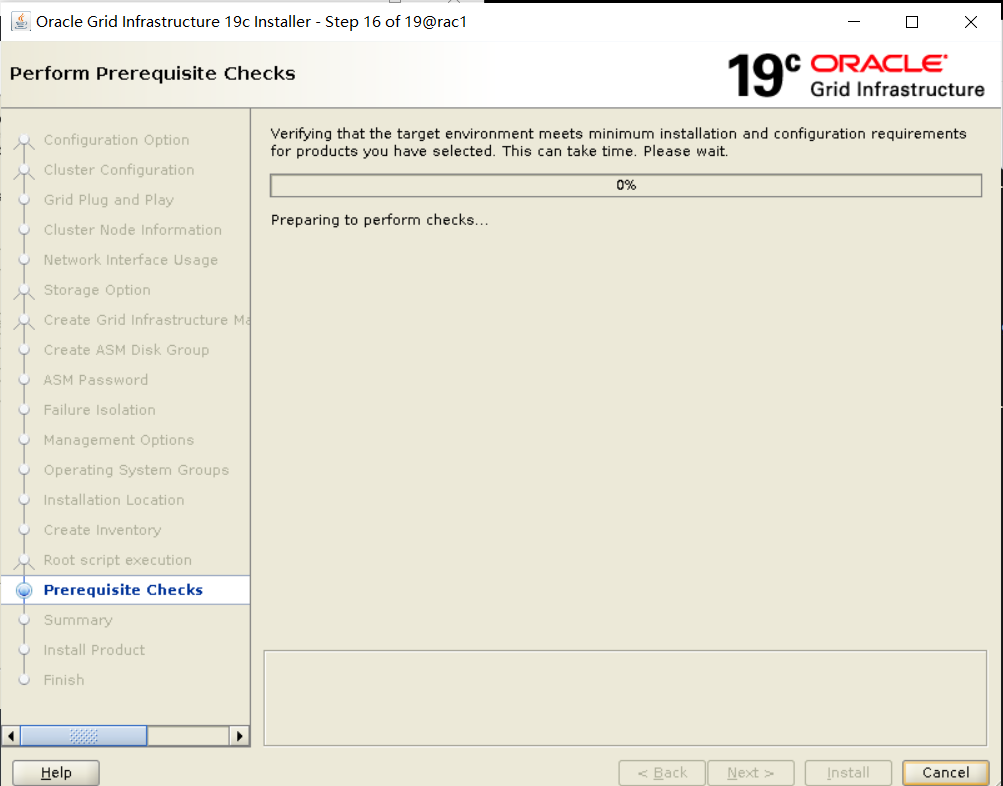

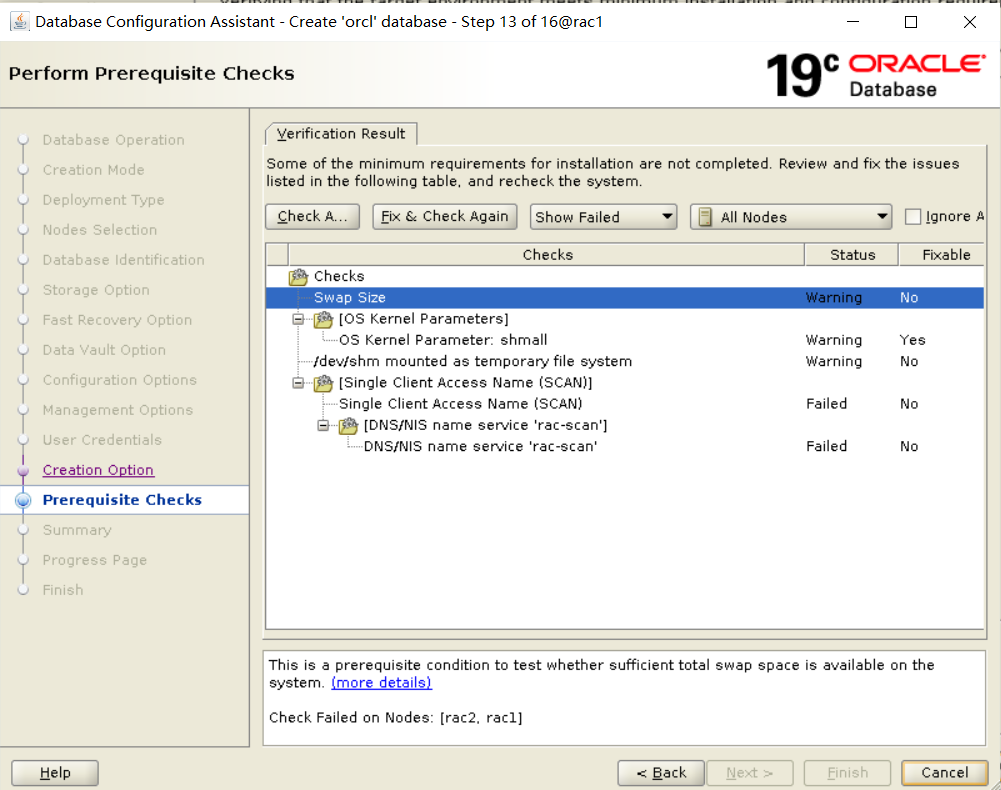

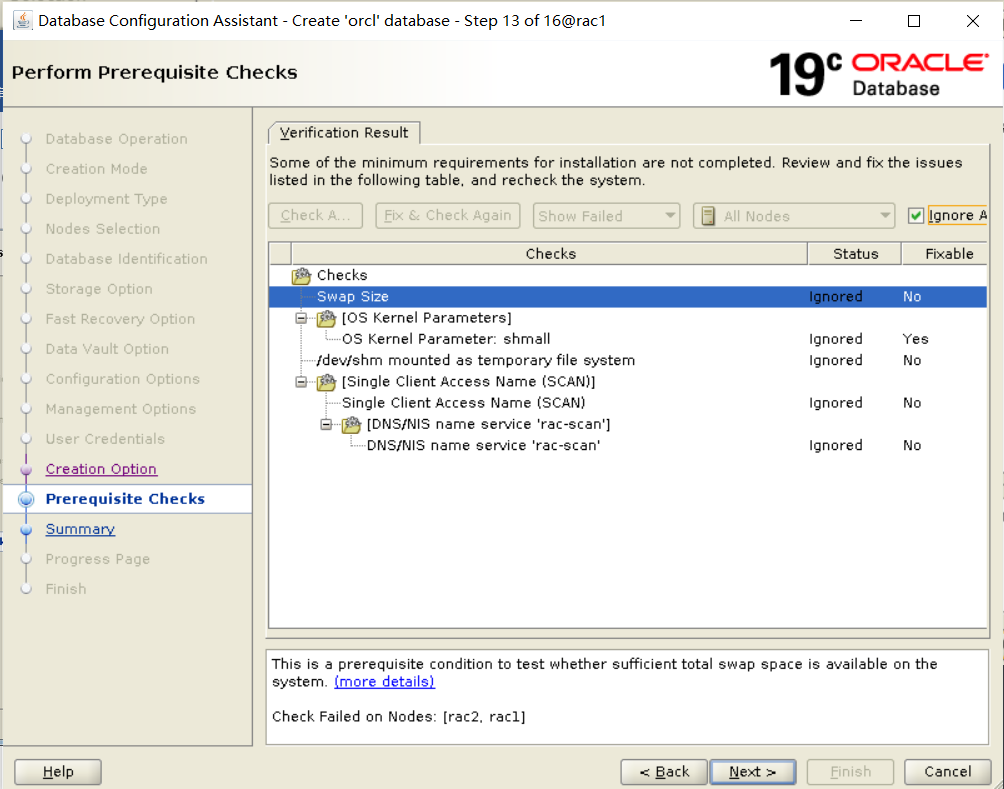

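Two of the checks fail, mainly because no DNS service is used. They can be ignored.

Click "Yes".

Note: as root, run these two scripts on rac1 first and then on rac2. For example, run orainstRoot.sh on rac2 only after it has finished on rac1.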
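The required order can be spelled out with a small dry-run sketch. `root_script_plan` is a hypothetical helper that only prints the commands rather than running them; it assumes the script paths used in this guide and the root SSH trust set up in section 2.1. root.sh asks for input interactively, so in practice the scripts are run by hand on each node.

```shell
# Hypothetical helper: print the root scripts in the order they should run.
# Each script is completed on every node before the next script starts.
# Usage: root_script_plan NODE...
root_script_plan() {
    for script in /u01/app/oraInventory/orainstRoot.sh /u01/app/19c/grid/root.sh; do
        for node in "$@"; do
            echo "ssh root@${node} ${script}"
        done
    done
}

# Example (commented out): prints four commands, rac1 before rac2 for each script.
# root_script_plan rac1 rac2
```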

[root@rac1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 ~]# /u01/app/19c/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19c/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19c/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac1/crsconfig/rootcrs_rac1_2022-04-22_10-50-07PM.log

2022/04/22 22:50:30 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2022/04/22 22:50:31 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2022/04/22 22:50:31 CLSRSC-363: User ignored prerequisites during installation

2022/04/22 22:50:31 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2022/04/22 22:50:34 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2022/04/22 22:50:35 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2022/04/22 22:50:35 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2022/04/22 22:50:35 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2022/04/22 22:51:14 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2022/04/22 22:51:19 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2022/04/22 22:51:31 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2022/04/22 22:51:31 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2022/04/22 22:52:01 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2022/04/22 22:52:13 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2022/04/22 22:52:17 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2022/04/22 22:53:09 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2022/04/22 22:53:13 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2022/04/22 22:53:18 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2022/04/22 22:53:23 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

ASM has been created and started successfully.

[DBT-30001] Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-220422PM105407.log for details.

2022/04/22 22:55:46 CLSRSC-482: Running command: '/u01/app/19c/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk b1f361bac9144f9cbfa05a20dc3e1a67.

Successfully replaced voting disk group with +CSDG.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE b1f361bac9144f9cbfa05a20dc3e1a67 (/dev/sde) [CSDG]

Located 1 voting disk(s).

2022/04/22 22:57:46 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2022/04/22 22:59:37 CLSRSC-343: Successfully started Oracle Clusterware stack

2022/04/22 22:59:38 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2022/04/22 23:02:49 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2022/04/22 23:04:30 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac1 ~]#

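Click "OK".

An error is reported here. It is again caused by DNS; because DNS is not used in this setup, it can be ignored. Click OK.

Click "Next" and let the installation finish.

Click "Yes".

Click "Close". The installation is complete.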

- Check the cluster status

    [grid@rac1:/home/grid]$crsctl stat res -t
    --------------------------------------------------------------------------------
    Name           Target  State        Server                   State details
    --------------------------------------------------------------------------------
    Local Resources
    --------------------------------------------------------------------------------
    ora.LISTENER.lsnr
                   ONLINE  ONLINE       rac1                     STABLE
                   ONLINE  ONLINE       rac2                     STABLE
    ora.chad
                   ONLINE  ONLINE       rac1                     STABLE
                   ONLINE  ONLINE       rac2                     STABLE
    ora.net1.network
                   ONLINE  ONLINE       rac1                     STABLE
                   ONLINE  ONLINE       rac2                     STABLE
    ora.ons
                   ONLINE  ONLINE       rac1                     STABLE
                   ONLINE  ONLINE       rac2                     STABLE
    --------------------------------------------------------------------------------
    Cluster Resources
    --------------------------------------------------------------------------------
    ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)
          1        ONLINE  ONLINE       rac1                     STABLE
          2        ONLINE  ONLINE       rac2                     STABLE
          3        OFFLINE OFFLINE                               STABLE
    ora.CSDG.dg(ora.asmgroup)
          1        ONLINE  ONLINE       rac1                     STABLE
          2        ONLINE  ONLINE       rac2                     STABLE
          3        OFFLINE OFFLINE                               STABLE
    ora.LISTENER_SCAN1.lsnr
          1        ONLINE  ONLINE       rac1                     STABLE
    ora.asm(ora.asmgroup)
          1        ONLINE  ONLINE       rac1                     Started,STABLE
          2        ONLINE  ONLINE       rac2                     Started,STABLE
          3        OFFLINE OFFLINE                               STABLE
    ora.asmnet1.asmnetwork(ora.asmgroup)
          1        ONLINE  ONLINE       rac1                     STABLE
          2        ONLINE  ONLINE       rac2                     STABLE
          3        OFFLINE OFFLINE                               STABLE
    ora.cvu
          1        ONLINE  ONLINE       rac1                     STABLE
    ora.qosmserver
          1        ONLINE  ONLINE       rac1                     STABLE
    ora.rac1.vip
          1        ONLINE  ONLINE       rac1                     STABLE
    ora.rac2.vip
          1        ONLINE  ONLINE       rac2                     STABLE
    ora.scan1.vip
          1        ONLINE  ONLINE       rac1                     STABLE
    --------------------------------------------------------------------------------

Check the IP addresses on rac1:

    [grid@rac1:/home/grid]$ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:6c:2b:40 brd ff:ff:ff:ff:ff:ff
        inet 192.168.100.101/24 brd 192.168.100.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.100.200/24 brd 192.168.100.255 scope global secondary ens33:1
           valid_lft forever preferred_lft forever
        inet 192.168.100.201/24 brd 192.168.100.255 scope global secondary ens33:2
           valid_lft forever preferred_lft forever
        inet6 fe80::effb:10f8:9580:34e/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    3: ens36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:6c:2b:4a brd ff:ff:ff:ff:ff:ff
        inet 10.10.10.101/24 brd 10.10.10.255 scope global noprefixroute ens36
           valid_lft forever preferred_lft forever
        inet 169.254.6.205/19 brd 169.254.31.255 scope global ens36:1
           valid_lft forever preferred_lft forever
        inet6 fe80::e654:5dde:a383:d4cf/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    4: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
        link/ether 52:54:00:04:9d:96 brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
           valid_lft forever preferred_lft forever
    5: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000
        link/ether 52:54:00:04:9d:96 brd ff:ff:ff:ff:ff:ff

The SCAN IP (192.168.100.200) and the rac1 VIP (192.168.100.201) appear as secondary addresses on the public interface ens33.

3. Database installation (RAC1)

3.1 Upload the installation package

- As root, upload LINUX.X64_193000_db_home.zip to /u01/app/oracle/product/19c/db_1

- Change the owner and group

    [root@rac1 ~]# cd /u01/app/oracle/product/19c/db_1
    [root@rac1 db_1]# chown -R oracle:oinstall LINUX.X64_193000_db_home.zip

3.2 Unzip

- Switch user

    [root@rac1 db_1]# su - oracle
    Last login: Tue Apr 19 09:24:47 CST 2022 on pts/0

- Change directory

    [oracle@rac1:/home/oracle]$cd $ORACLE_HOME
    [oracle@rac1:/u01/app/oracle/product/19c/db_1]$cd /u01/app/oracle/product/19c/db_1

- Unzip

    unzip LINUX.X64_193000_db_home.zip

3.3 Install the database

- Set the DISPLAY variable

    [oracle@rac1:/u01/app/oracle/product/19c/db_1]$export DISPLAY=192.168.100.66:0.0

- Run the installer

    [oracle@rac1:/u01/app/oracle/product/19c/db_1]$./runInstaller

Notes:

1. The firewall on the machine that displays the installer window (192.168.100.66) must be turned off. Otherwise an error similar to the following is reported:

    [oracle@rac1:/u01/app/oracle/product/19c/db_1]$./runInstaller
    ERROR: Unable to verify the graphical display setup. This application requires X display. Make sure that xdpyinfo exist under PATH variable.

    Can't connect to X11 window server using '192.168.100.66:0.0' as the value of the DISPLAY variable.

2. If you connect to the server or virtual machine with Xshell, Xmanager must be installed on the displaying machine or host.

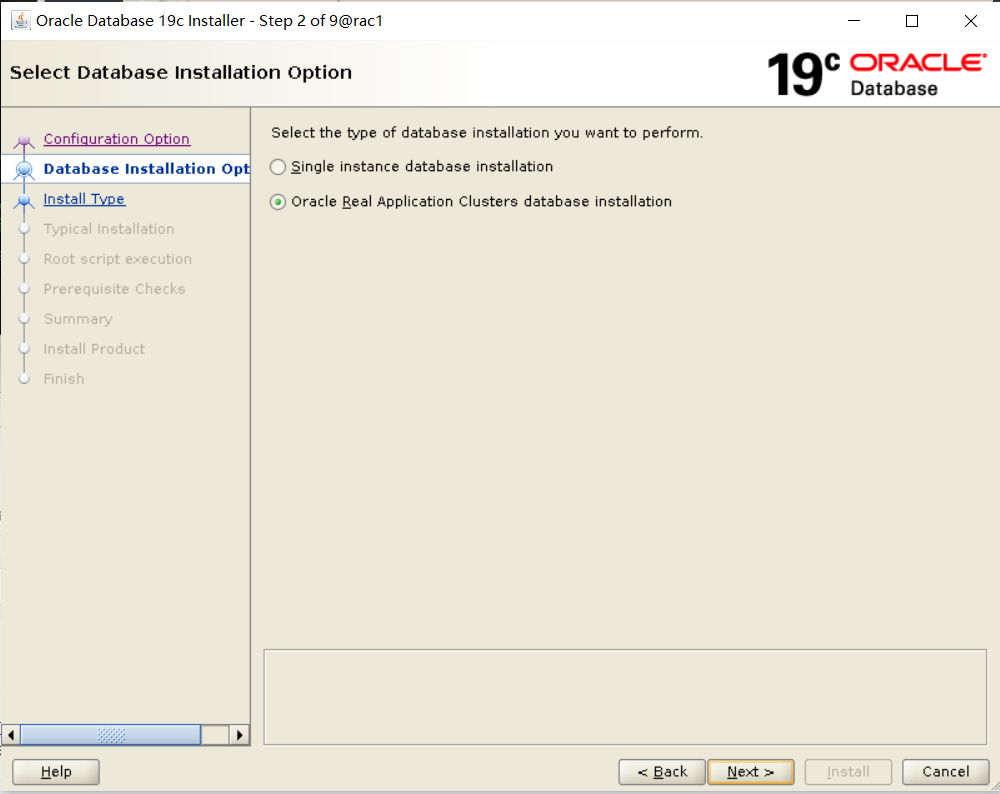

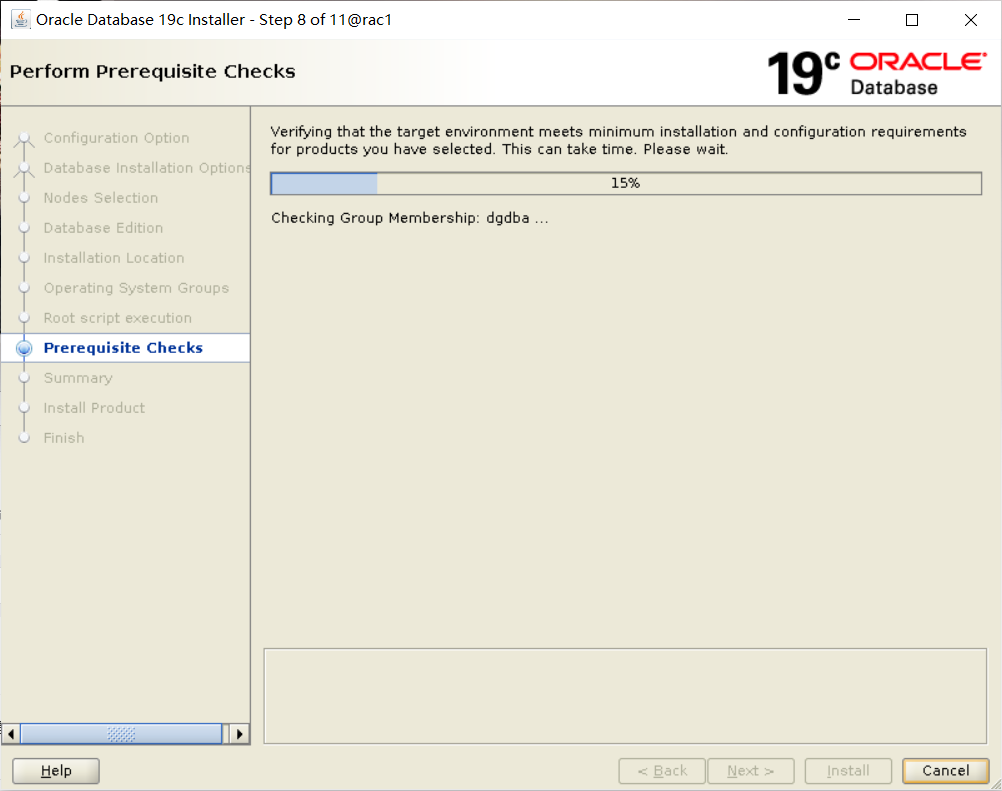

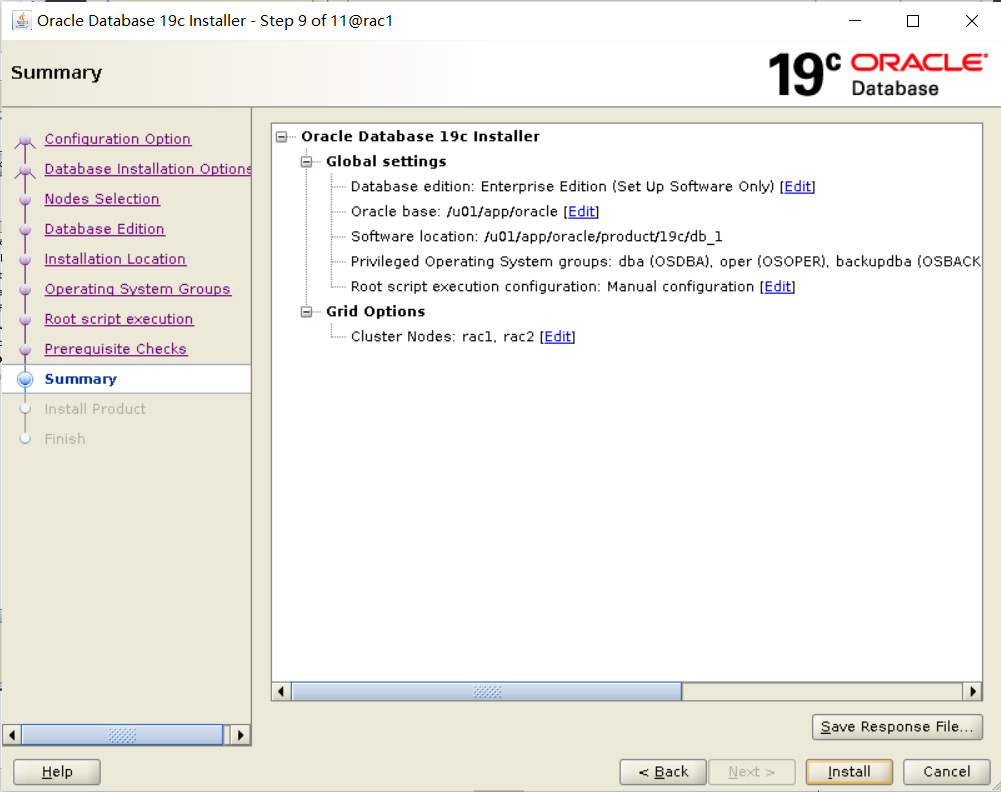

安装过程

注:只安装数据库软件,安装数据库软件成功后,再创建实例。

主要是DNS的问题, 直接忽略即可。

点击“Yes”

分别以root用户在rac1和rac2上运行该脚本

点击“Close”,安装完成

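4. Create the database (RAC1)

4.1 Launch the configuration GUI

Switch to the grid user and launch the graphical tool asmca, which is used here to prepare the ASM storage for the database.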

[root@rac1 ~]# su - grid

Last login: Thu Apr 28 20:19:54 CST 2022

[grid@rac1:/home/grid]$export DISPLAY=192.168.100.66:0.0

[grid@rac1:/home/grid]$asmca

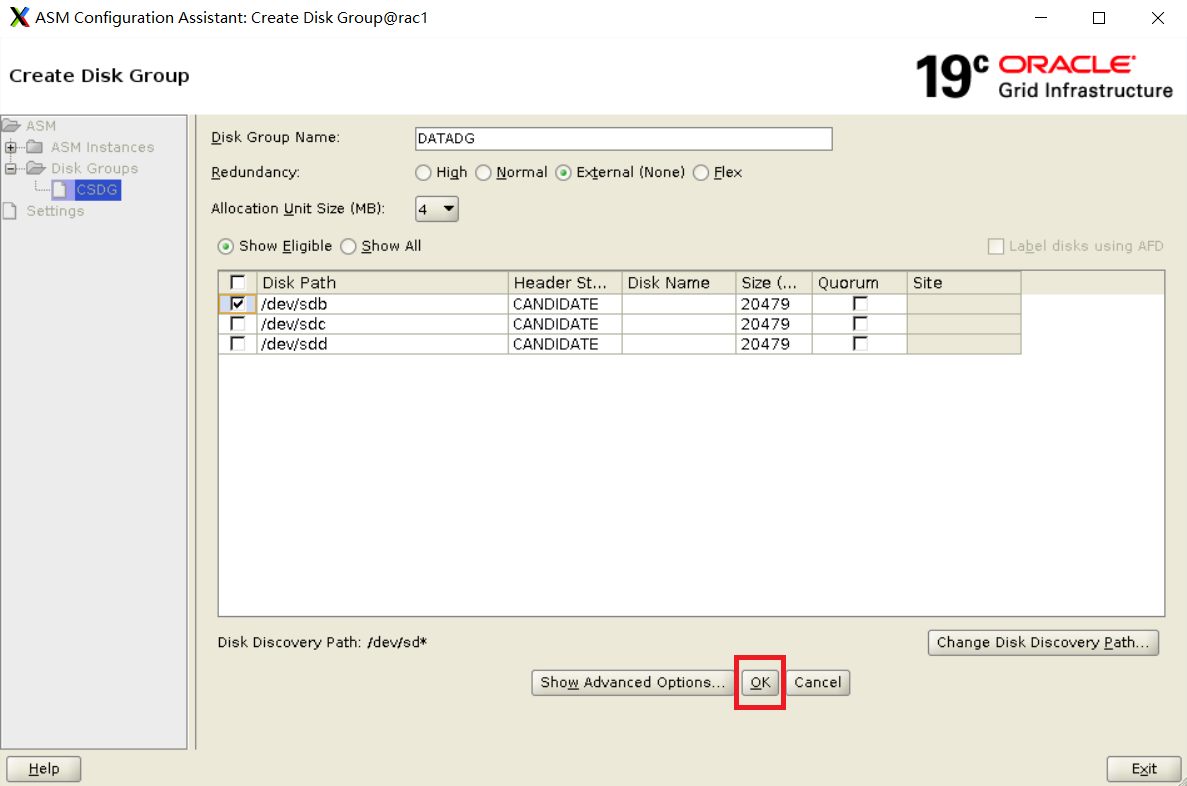

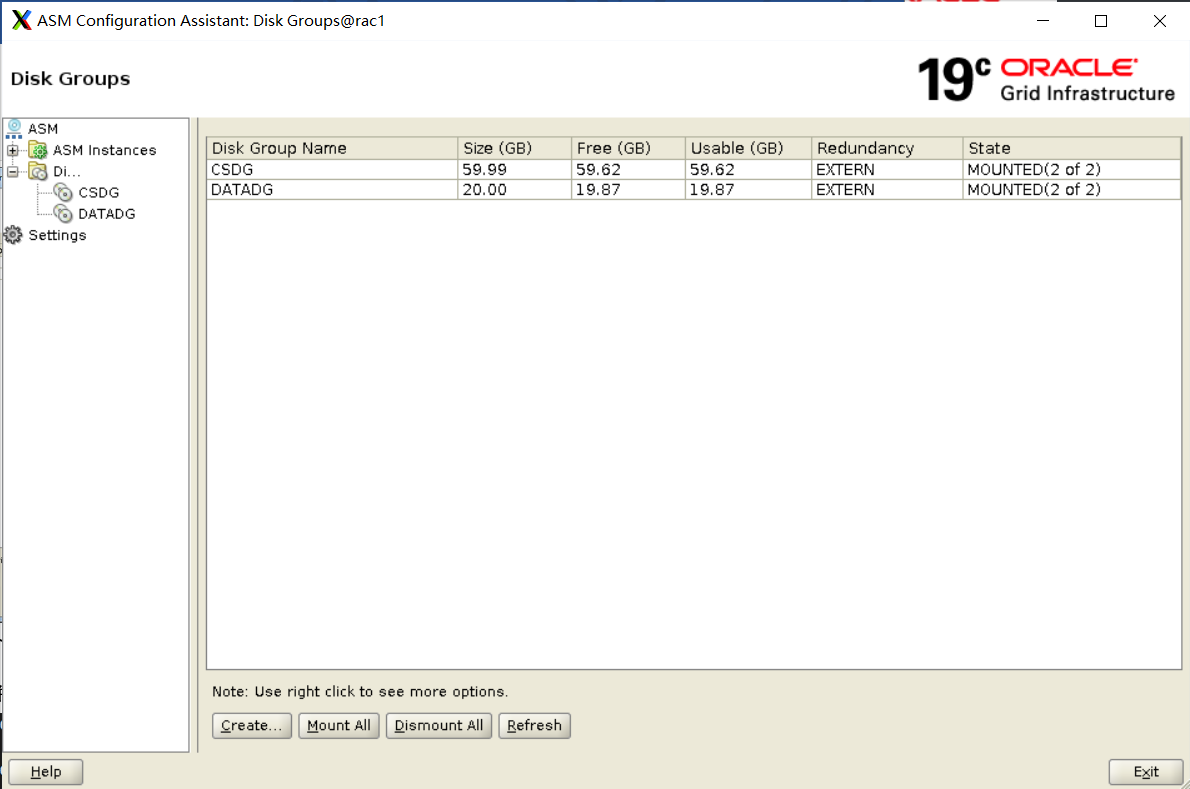

4.2 Create the ASM disk groups

Close the dialog when finished.

4.3 Create the database

- Switch to the oracle user and launch the configuration GUI

    [grid@rac1:/home/grid]$su - oracle
    Password:
    Last login: Mon Apr 25 21:42:45 CST 2022
    [oracle@rac1:/home/oracle]$export DISPLAY=192.168.100.66:0.0
    [oracle@rac1:/home/oracle]$dbca

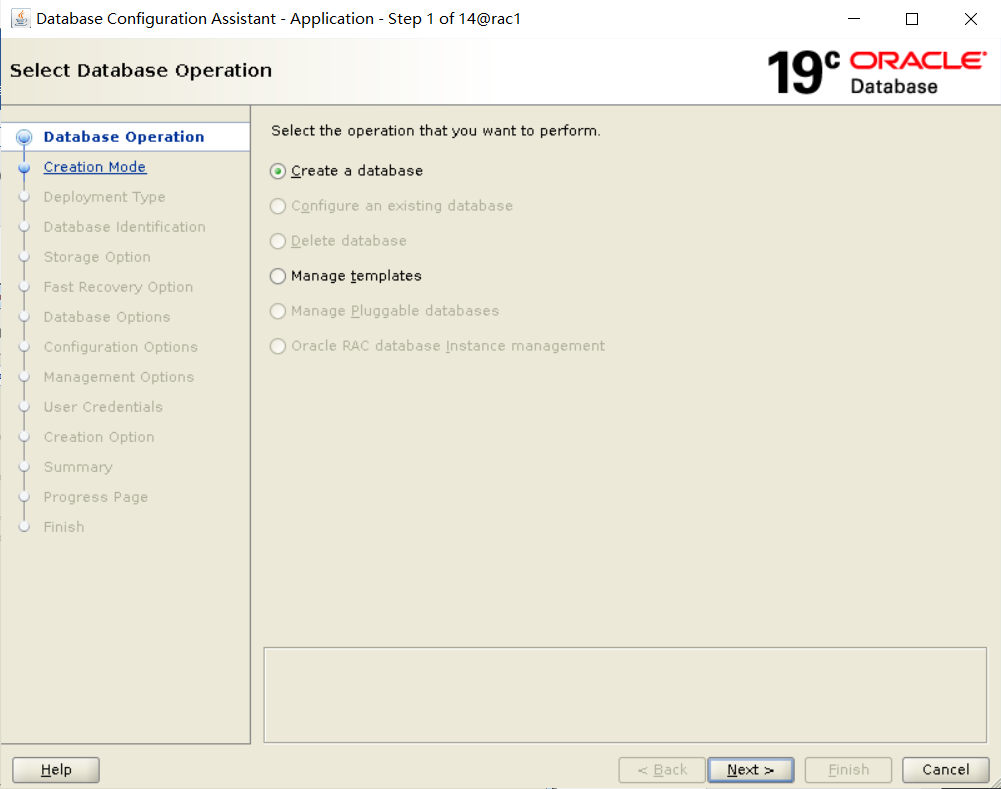

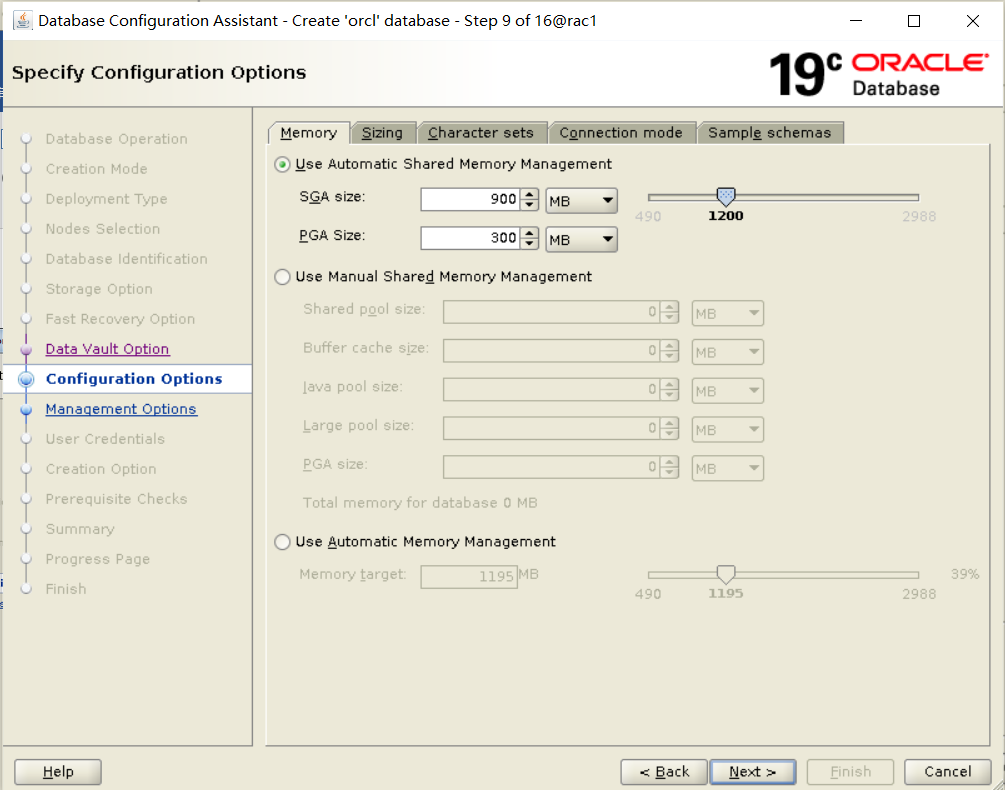

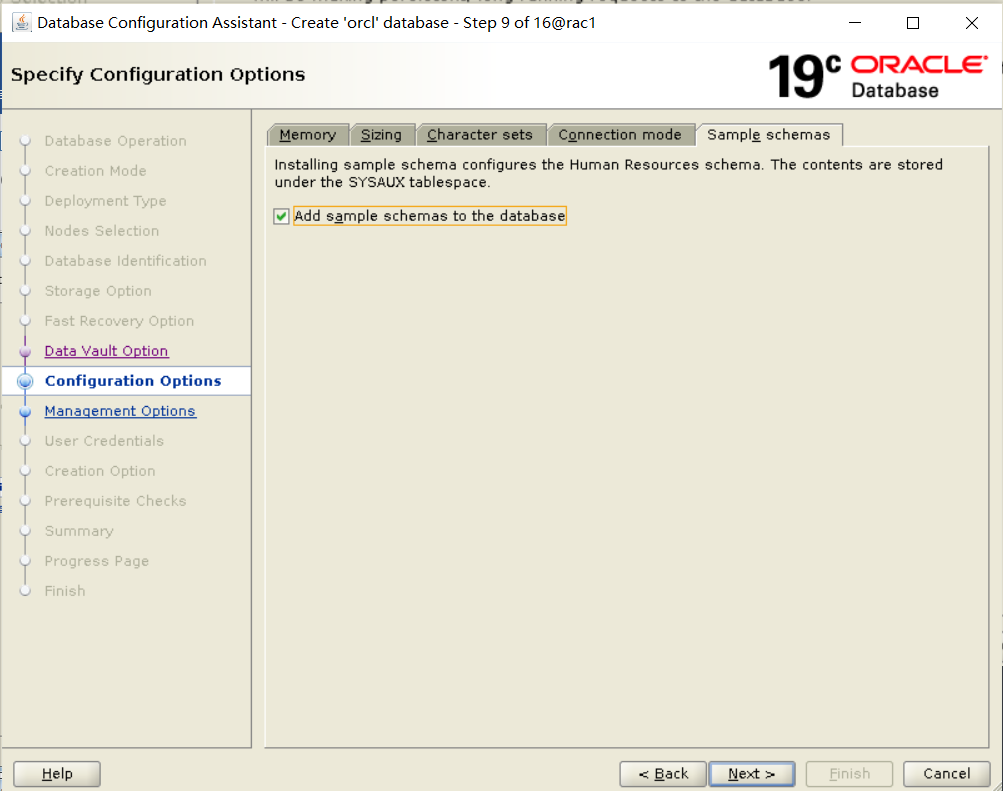

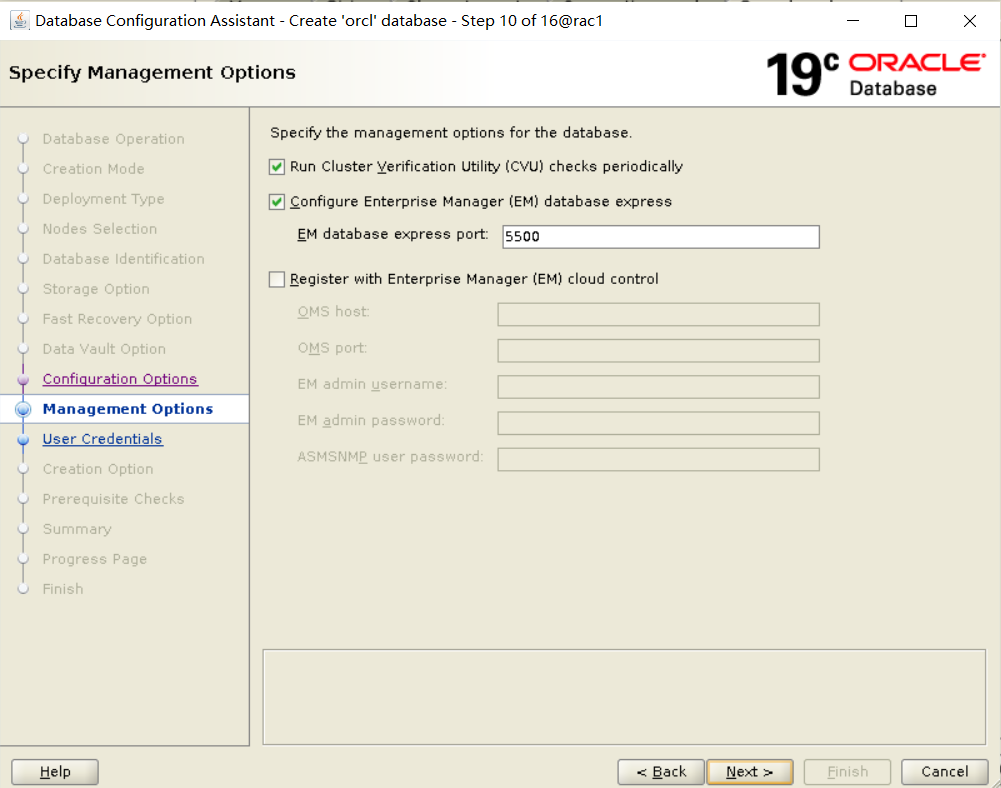

- Creation process

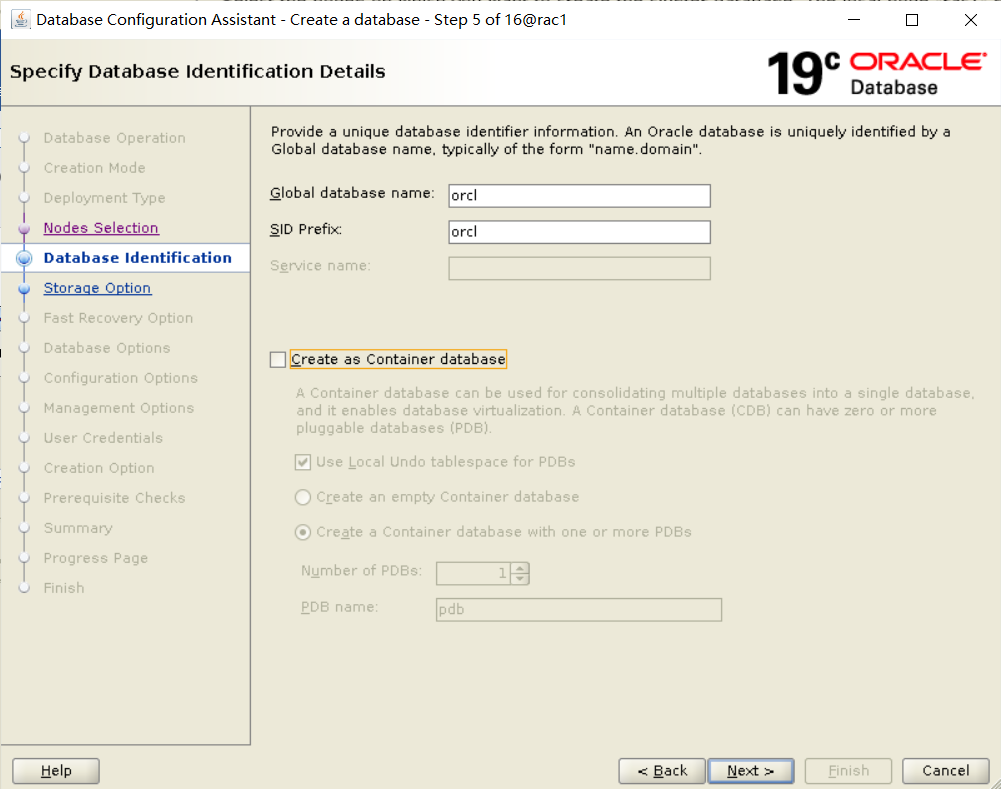

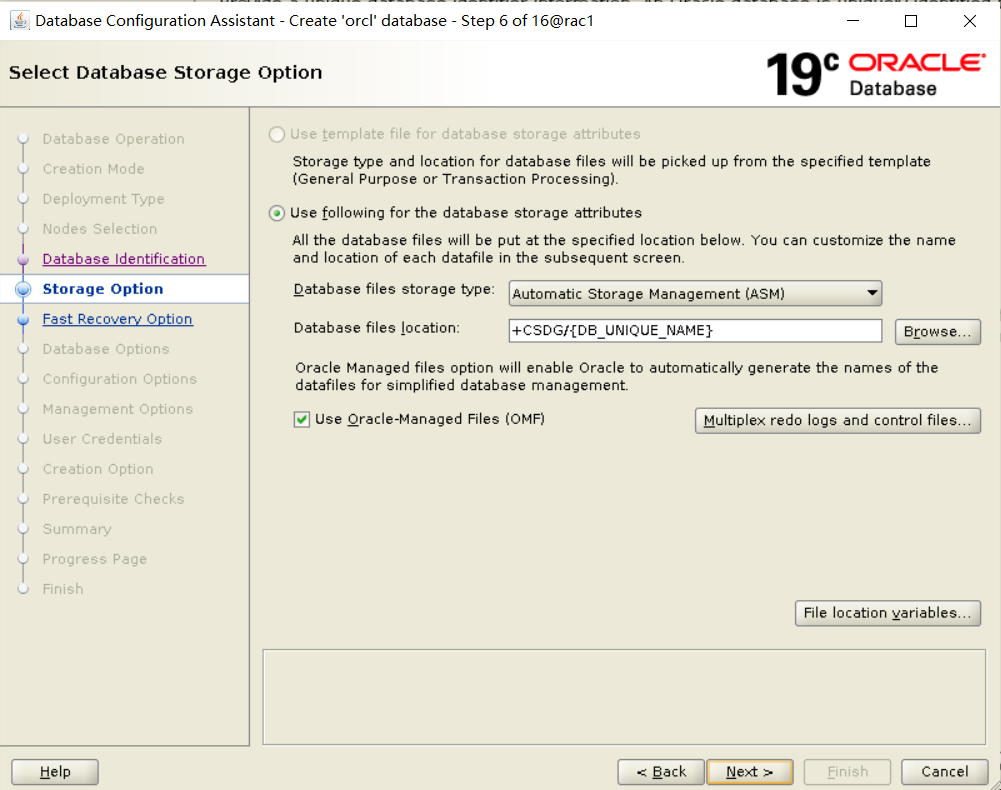

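Note: you can create a regular (non-container) database directly, or, depending on your requirements, create a container database and then create pluggable databases in it.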

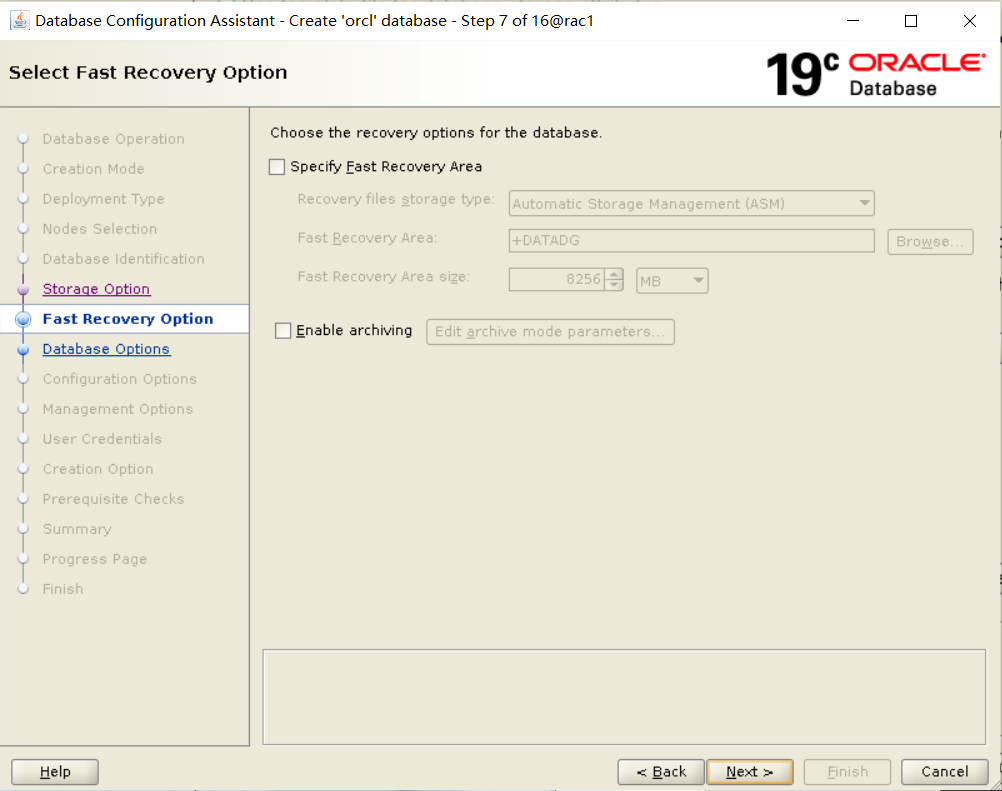

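Note: decide whether to enable archiving according to your requirements. It can also be enabled after the database has been created; it is left disabled here for now.

Note: select the AL32UTF8 character set.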

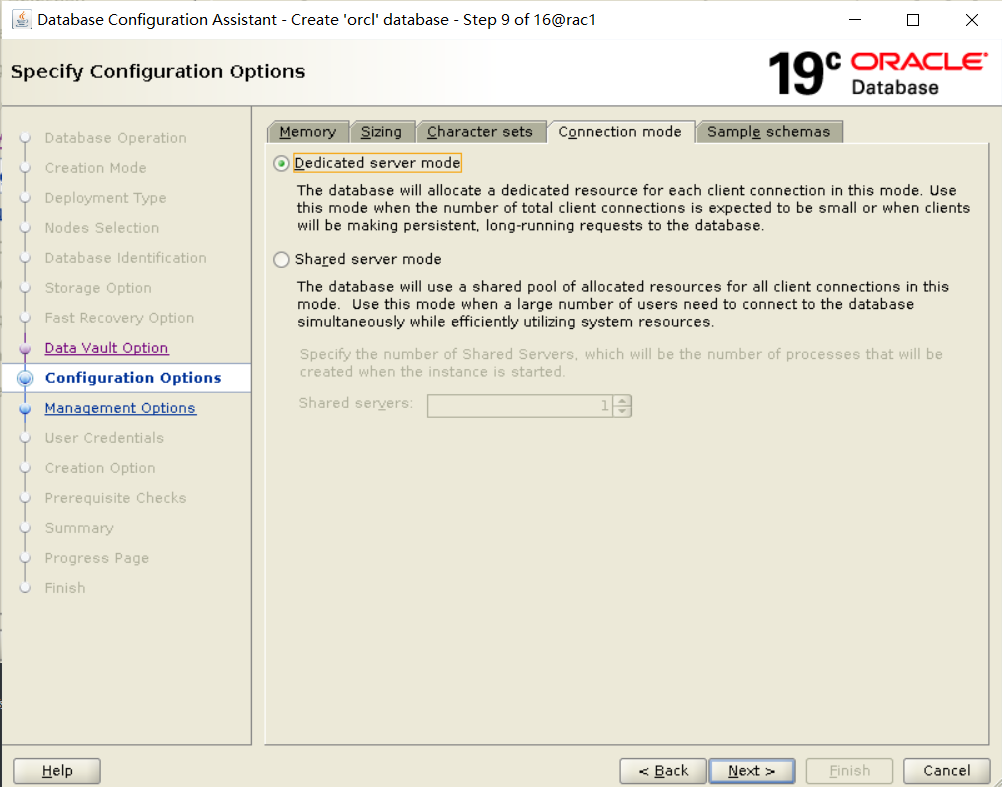

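Note: choose shared server mode or dedicated server mode according to your requirements.

The warnings shown at this step can be ignored.

This completes the installation.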

Source: 博客园 (cnblogs)
